Global ransomware attack may be bigger than hackers intended

Tech Take: Alex Hamerstone and Malcolm Harkins share insight into the massive WannaCry ransomware attack that infected computers worldwide

Ransomware can be a deadly scourge for companies and individual users.

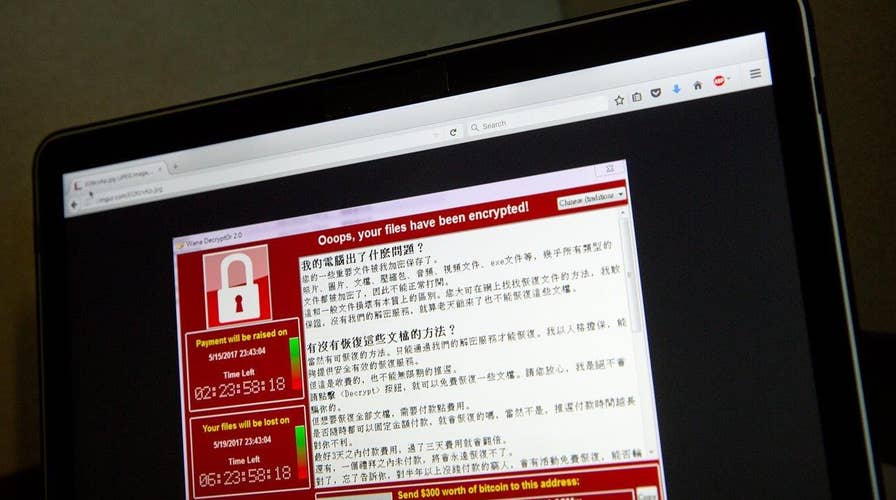

If you don’t believe that, just ask emergency room workers in the UK. Last week, the WannaCry ransomware attack crippled their network -- one report suggested people with life-threatening injuries were told not to come to the hospital. Another said ambulances were redirected.

Seeing a ransomware message on a computer screen is also a major source of stress. The computer suddenly falls under the control of a foreign entity, one that starts demanding a payment -- usually in Bitcoin to protect their identity -- to release locally stored files and apps.

But now, researchers have another possible solution.

In the future, security systems could use artificial intelligence to monitor user behavior, track activity, suggest when there may be a danger and even mount an attack against the ransomware purveyors, effectively rendering the deadly malware client inoperable.

“AI helps organizations understand the network and its activity,” says Jason Kichen, the Director of Cybersecurity Services at Versive. “It can then clearly articulate to the security team what the problem is, so that those teams can make informed decisions.”

Raja Mukerji, the cofounder and Chief Customer Officer at ExtraHop Networks, compares the way an AI could block ransomware to the way airport security stops people from carrying water bottles.

Today, everyone has to drop their water bottle in a bin. Current computer security systems tend to use the same approach -- all traffic is monitored and blocked. Yet, harmful agents still sneak through, as is the case with the WannaCry virus. A new technique using AI in airport security would not block all water bottles. It would be smart enough to identify behavior.

At the airport, an AI might use computer vision technology to notice when someone acts nervous and twitchy, or when the same person keeps approaching different checkpoints. Similarly, an AI could look for strange activity on your computer -- a Windows app that doesn’t belong there, an email from someone you don’t know with an attachment, or something else malicious.

“AI looks for patterns of contextual irregularity in people’s behavior,” explains Mukerji. “It’s all about preventing a compromise, but doing so in a dynamic, adaptable manner.”

Kichen says AI could add significant value. Often, a ransomware attack such as WannaCry uses social engineering -- someone working the graveyard shift in the ER clicks on an attachment and cripples the network. Suddenly, the computer is hijacked. An AI would know an incredible amount of information -- that the worker is tired and overworked, that he or she is not computer-savvy, that the server used to send an email belongs to a known hacker.

Interestingly, some of this machine learning is already in use, analyzing network traffic. However, where an AI really helps is in predicting imminent threats.
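For readers curious what "analyzing network traffic" might look like in practice, here is a minimal sketch in Python. It is not how Versive, ExtraHop or any other vendor actually works. It simply assumes each computer has a short history of how many outbound connections it normally makes per minute, and flags any machine whose latest count sits far above its own baseline. The host names, the numbers and the `flag_anomalies` function are all hypothetical.

```python
from statistics import mean, stdev


def flag_anomalies(baseline, observed, threshold=3.0):
    """Return hosts whose latest count is far above their own history.

    baseline:  dict of host -> list of past per-minute connection counts
    observed:  dict of host -> latest per-minute connection count
    threshold: how many standard deviations above normal counts as strange
    """
    flagged = []
    for host, count in observed.items():
        history = baseline.get(host, [])
        if len(history) < 2:
            continue  # too little history to judge this host
        mu = mean(history)
        sigma = stdev(history)
        if sigma == 0:
            sigma = 1.0  # perfectly flat history: avoid dividing by zero
        z_score = (count - mu) / sigma
        if z_score > threshold:
            flagged.append(host)
    return flagged


# Hypothetical data: two emergency room workstations.
baseline = {
    "er-desk-1": [4, 6, 5, 7, 5],
    "er-desk-2": [3, 4, 2, 5, 4],
}
observed = {"er-desk-1": 6, "er-desk-2": 250}

print(flag_anomalies(baseline, observed))
```

In this made-up example only the second workstation is flagged, because 250 connections in a minute is wildly out of line with its usual handful. A real system would weigh many more signals than a single count, but the idea is the same: learn what normal looks like, then watch for departures from it.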

Marty Kamden, a spokesperson for NordVPN, says the greatest dangers are those we know nothing about. Yet, there is a vast treasure trove of information about previous attacks, techniques, and success. An AI could learn and adapt faster than any human.

In the end, that could save lives. Especially in an emergency room.