The latest version of ChatGPT, the artificial intelligence chatbot from OpenAI, is smart enough to pass a radiology board-style exam, a new study from the University of Toronto found.

GPT-4, which OpenAI released on March 14, 2023, correctly answered 81% of the 150 multiple-choice questions on the exam.

Despite the chatbot’s high accuracy, the study — published in Radiology, a journal of the Radiological Society of North America (RSNA) — also detected some concerning inaccuracies.

"A radiologist is doing three things when interpreting medical images: looking for findings, using advanced reasoning to understand the meaning of the findings, and then communicating those findings to patients and other physicians," explained lead author Rajesh Bhayana, M.D., an abdominal radiologist and technology lead at University Medical Imaging Toronto, Toronto General Hospital in Toronto, Canada, in a statement to Fox News Digital.

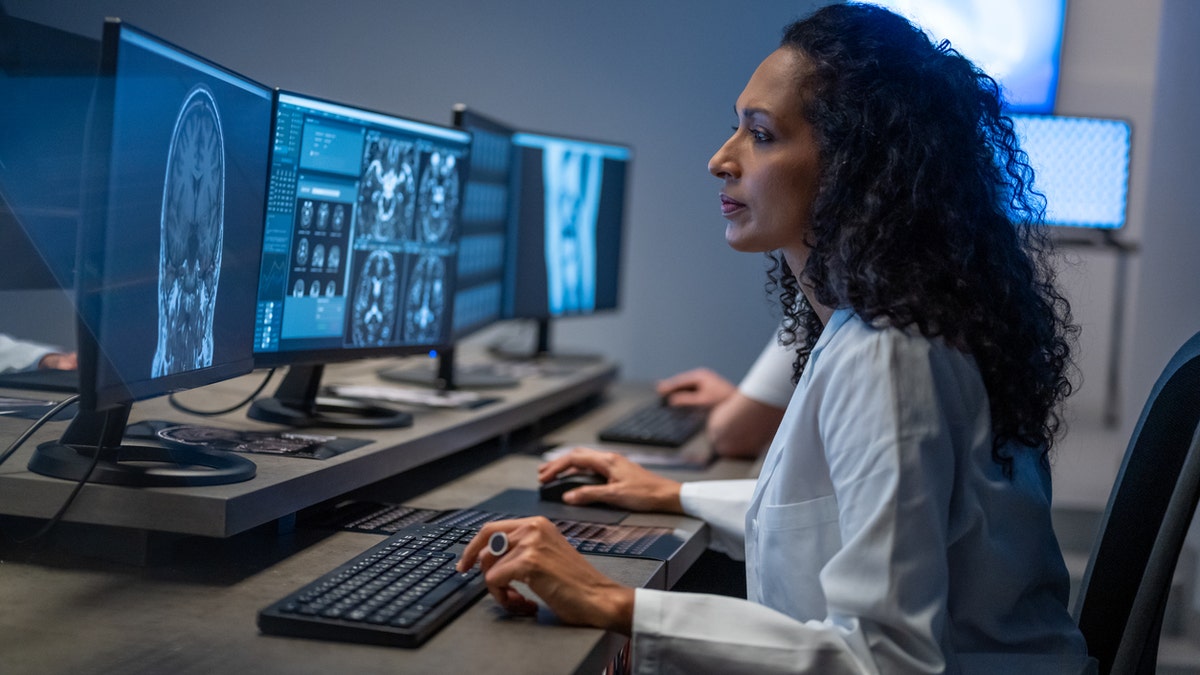

The latest version of ChatGPT, the artificial intelligence chatbot from OpenAI, is smart enough to pass a radiology board-style exam, a new study from the University of Toronto has found. (iStock)

"Most AI research in radiology has focused on computer vision, but language models like ChatGPT are essentially performing steps two and three (the advanced reasoning and language tasks)," he went on.

"Our research provides insight into ChatGPT’s performance in a radiology context, highlighting the incredible potential of large language models, along with the current limitations that make it unreliable."

The researchers created the questions in a way that mirrored the style, content and difficulty of the Canadian Royal College and American Board of Radiology exams, according to a discussion of the study in the medical journal.

(Because ChatGPT doesn’t yet accept images, the researchers were limited to text-based questions.)

The questions were then posed to two different versions of ChatGPT: GPT-3.5 and the newer GPT-4.

‘Marked improvement’ in advanced reasoning

The GPT-3.5 version of ChatGPT answered 69% of questions correctly (104 of 150), near the passing grade of 70% used by the Royal College in Canada, according to the study findings.

It struggled the most with questions involving "higher-order thinking," such as describing imaging findings.

"A radiologist is doing three things when interpreting medical images: looking for findings, using advanced reasoning to understand the meaning of the findings, and then communicating those findings to patients and other physicians," said the lead author of a new study (not pictured). (iStock)

As for GPT-4, it answered 81% (121 of 150) of the same questions correctly — exceeding the passing threshold of 70%.

The newer version did much better at answering the higher-order thinking questions.

"The purpose of the study was to see how ChatGPT performed in the context of radiology — both in advanced reasoning and basic knowledge," Bhayana said.

"GPT-4 performed very well in both areas, and demonstrated improved understanding of the context of radiology-specific language — which is critical to enable the more advanced tools that radiology physicians can use to be more efficient and effective," he added.

The researchers were surprised by GPT-4's "marked improvement" in advanced reasoning capabilities over GPT-3.5.

"Our findings highlight the growing potential of these models in radiology, but also in other areas of medicine," said Bhayana.

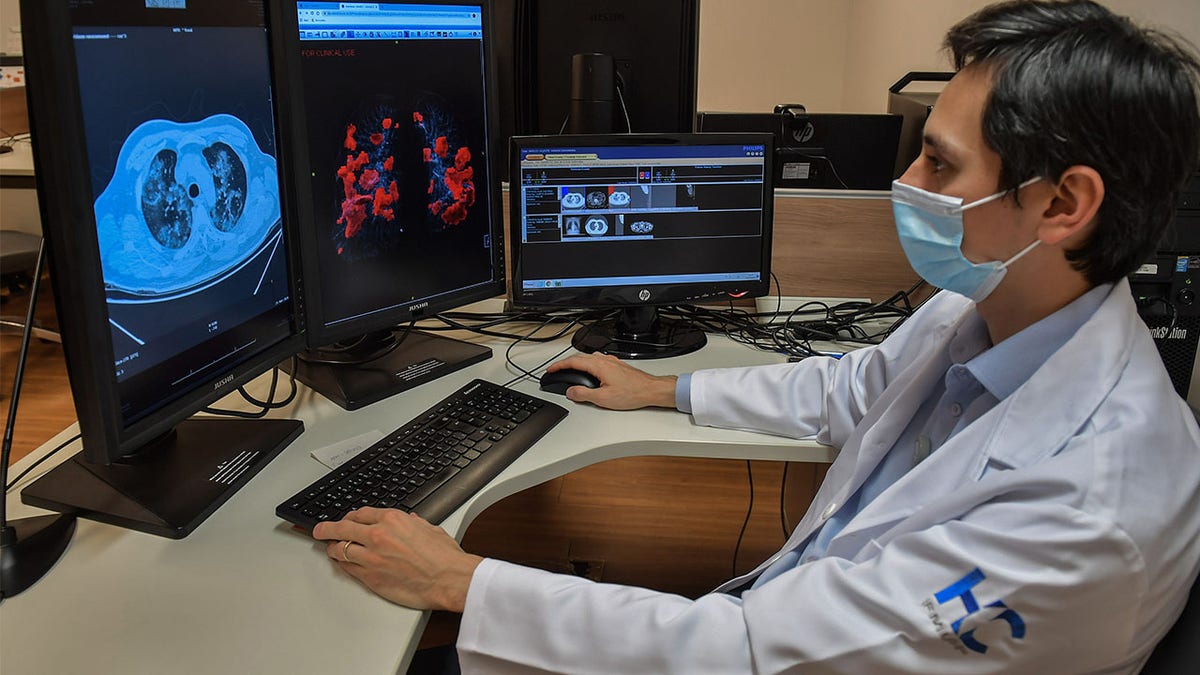

"Our findings highlight the growing potential of these models in radiology, but also in other areas of medicine," said the lead author of a new study. (NELSON ALMEIDA/AFP via Getty Images)

Dr. Harvey Castro, a Dallas, Texas-based board-certified emergency medicine physician and national speaker on artificial intelligence in health care, was not involved in the study but reviewed the findings.

"The leap in performance from GPT-3.5 to GPT-4 can be attributed to a more extensive training dataset and an increased emphasis on human reinforcement learning," he told Fox News Digital.

"This expanded training enables GPT-4 to interpret, understand and utilize embedded knowledge more effectively," he added.

Getting a higher score on a standardized test, however, doesn't necessarily equate to a more profound understanding of a medical subject such as radiology, Castro pointed out.

"It shows that GPT-4 is better at pattern recognition based on the vast amount of information it has been trained on," he said.

Future of ChatGPT in health care

Many health technology experts, including Bhayana, believe that large language models (LLMs) like GPT-4 will change the way people interact with technology in general — and more specifically in medicine.

"They are already being incorporated into search engines like Google, electronic medical records like Epic, and medical dictation software like Nuance," he told Fox News Digital.

"But there are many more advanced applications of these tools that will transform health care even further."

"The leap in performance from GPT-3.5 to GPT-4 can be attributed to a more extensive training dataset and an increased emphasis on human reinforcement learning," Dr. Harvey Castro, a board-certified emergency physician and national speaker on AI in health care, told Fox News Digital. (Jakub Porzycki/NurPhoto)

In the future, Bhayana believes these models could answer patient questions accurately, help physicians make diagnoses and guide treatment decisions.

Homing in on radiology, he predicted that LLMs could augment radiologists' abilities and make them more efficient and effective.

"We are not yet quite there yet — the models are not yet reliable enough to use for clinical practice — but we are quickly moving in the right direction," he added.

Limitations of ChatGPT in medicine

Perhaps the biggest limitation of LLMs in radiology is their inability to interpret visual data, which is a critical aspect of radiology, Castro said.

LLMs like ChatGPT are also known for their tendency to "hallucinate," which is when they provide inaccurate information in a confident-sounding way, Bhayana pointed out.

"The models are not yet reliable enough to use for clinical practice."

"These hallucinations decreased in GPT-4 compared to 3.5, but it still occurs too frequently to be relied on in clinical practice," he said.

"Physicians and patients should be aware of the strengths and limitations of these models, including knowing that they cannot be relied on as a sole source of information at present," Bhayana added.

"Physicians and patients should be aware of the strengths and limitations of these models, including knowing that they cannot be relied on as a sole source of information at present." (Frank Rumpenhorst/picture alliance via Getty Images)

Castro agreed that while LLMs may have enough knowledge to pass tests, they can’t rival human physicians when it comes to determining patients’ diagnoses and creating treatment plans.

"Standardized exams, including those in radiology, often focus on 'textbook' cases," he said.

"But in clinical practice, patients rarely present with textbook symptoms."

Every patient has unique symptoms, histories and personal factors that may diverge from "standard" cases, said Castro.

"This complexity often requires nuanced judgment and decision-making, a capacity that AI — including advanced models like GPT-4 — currently lacks."

While the improved scores of GPT-4 are promising, Castro said, "much work must be done to ensure that AI tools are accurate, safe and valuable in a real-world clinical setting."