Using AI technology to read mammograms and assist in making diagnoses could put patients at risk, a new study suggests.

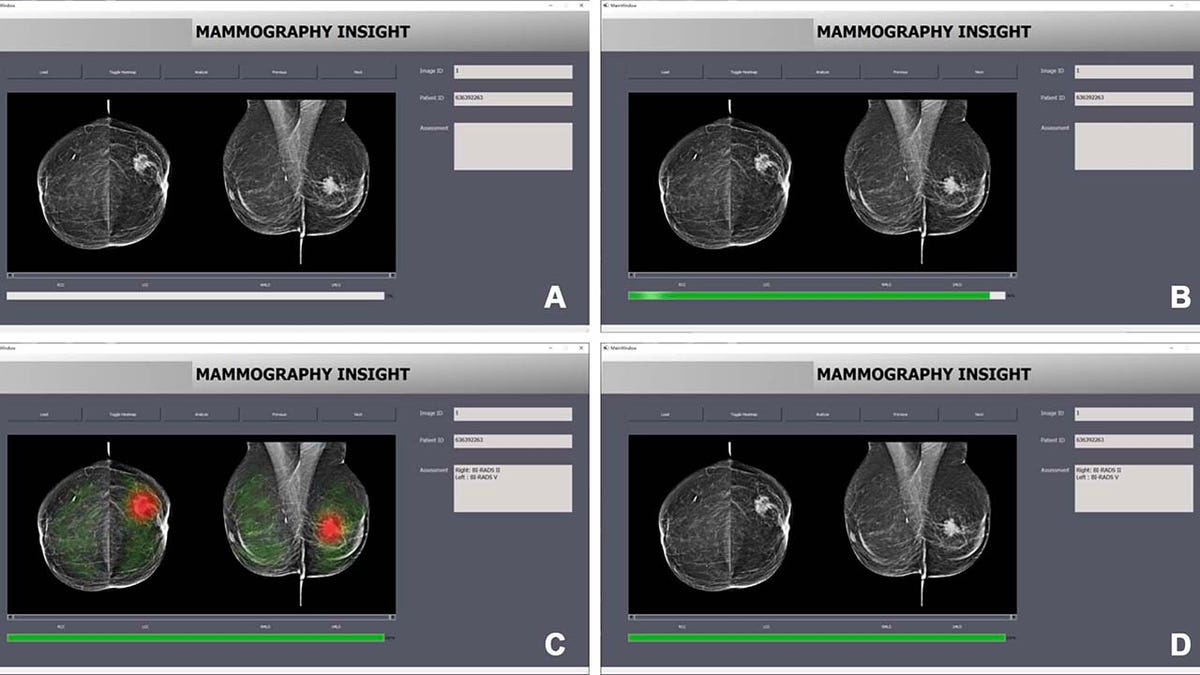

Often touted as a "second set of eyes" for radiologists, AI-based mammographic support systems are "extremely promising," said news agency SWNS.

But as the technology spreads, some worry that it may lead radiologists to "favor the AI’s suggestion over their own," the agency added.

The Institute of Diagnostic and Interventional Radiology at the University Hospital Cologne, Germany, conducted the study.

As part of the study, 27 radiologists read 50 mammograms and provided their Breast Imaging Reporting and Data System (BI-RADS) assessment to categorize breast imaging findings.

The 50 mammogram findings were split up into two random sets — one with 10 reports and the other with 40, according to SWNS.

The reports contained a mix of correct and incorrect BI-RADS category suggestions by AI.

A new study found that AI is not always accurate — and can negatively influence radiologists' judgment when it comes to making breast cancer diagnoses. (SWNS)

The AI system’s prediction had a significant impact on the accuracy of every group of radiologists (inexperienced, moderately experienced and very experienced). Readers were more likely to assign an incorrect BI-RADS category when the AI system suggested that same incorrect category, and more likely to assign the correct category when the AI suggested the correct one, as HealthImaging.com pointed out in its analysis of the study.

The study concluded that radiologists performed worse when they used AI-suggested categories to assign BI-RADS scores than when they made the assessment on their own.

Even very experienced radiologists, who averaged more than 15 years in the field, saw their accuracy fall from 82% to roughly 45% when the AI system suggested an incorrect category, the study found.

Even experienced radiologists were negatively influenced when assigning BI-RADS categories, the study found. (iStock)

Lead author Dr. Thomas Dratsch told SWNS that the researchers anticipated inaccurate AI predictions would influence radiologists' judgment.

"It was surprising to find that even highly experienced radiologists were adversely impacted by the AI system’s judgments, albeit to a lesser extent than their less seasoned counterparts," he said.

The study concluded that humans should use AI with caution.

"Our findings emphasize the need for implementing appropriate safeguards when incorporating AI into the radiological process, to mitigate the negative consequences of automation bias," he told SWNS.

ChatGPT has been known to have glitches — including giving false information in some situations. (Gabby Jones/Bloomberg via Getty Images)

The study findings were published in the journal Radiology.

ChatGPT is one of the most popular forms of AI.

Users can ask the chatbot any question and receive an answer almost immediately.

A new study revealed that AI technology should be used with caution by radiologists when it comes to analyzing mammograms. (iStock/SWNS)

In a recent study by the University of Maryland School of Medicine (UMSOM), researchers asked ChatGPT each of 25 questions about breast cancer screening three times and found that the AI bot answered 22 of them correctly.

However, the study also found that ChatGPT sometimes gave different answers to the same question across its three replies, and that some of the information it provided was outdated.

Fox News Digital's Melissa Rudy contributed to this report.