Experts weigh benefits, concerns surrounding AI-driven facial recognition technology

AI strategist Lisa Palmer and privacy consultant Jodi Daniels discuss privacy concerns around the acquisition of biometric data.

Artificial intelligence (AI) facial recognition technology is expanding significantly and is increasingly being deployed to catch criminals, but experts express concern about the impact on personal privacy and data.

According to the data firm Allied Market Research, the facial recognition industry, which was valued at $3.8 billion in 2020, is projected to grow to $16.7 billion by 2030.

Lisa Palmer, an AI strategist, said it is important to understand that an individual's data largely feeds what happens from an AI perspective, especially within a generative framework.

While data has been recorded on citizens for decades, today's surveillance is different because of the quantity and quality of the data recorded as well as how it is being used, according to Palmer.

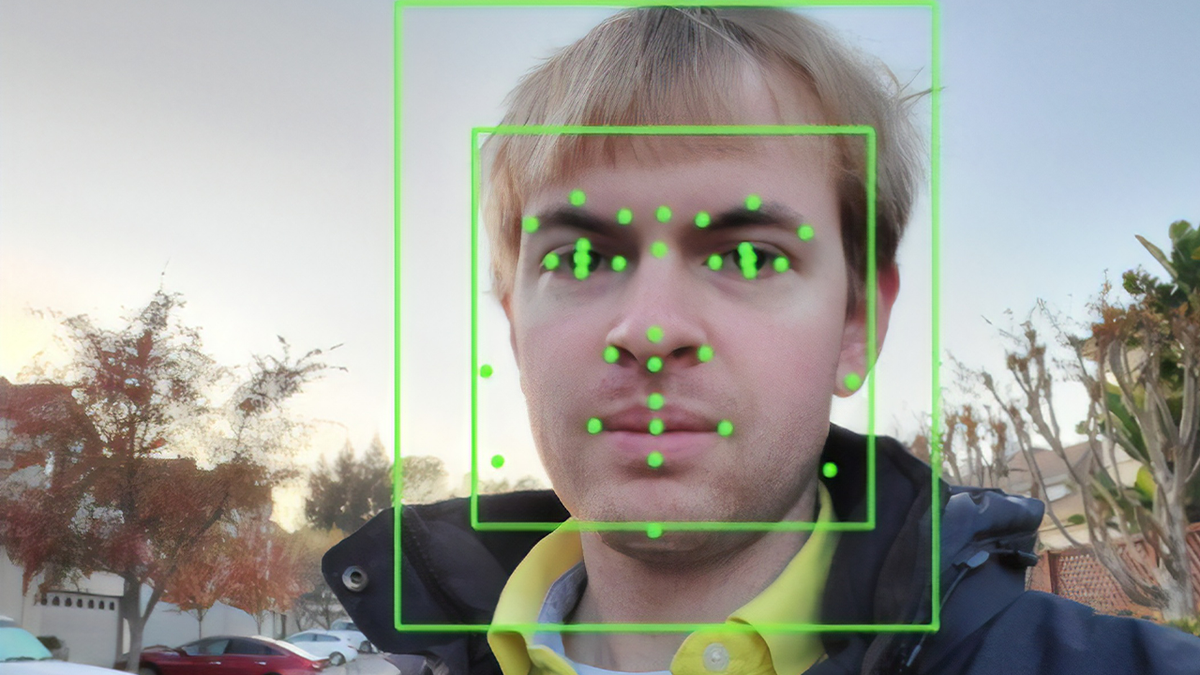

Output of an Artificial Intelligence system from Google Vision, performing Facial Recognition on a photograph of a man, with facial features identified and facial bounding boxes present, San Ramon, California, November 22, 2019. (Smith Collection/Gado/Getty Images)

"When you go to the airport, you are being recorded, you are being videoed from the moment that you cross onto that property throughout your entire experience and until you leave on the other end of that, that is all happening from a video perspective," Palmer said. "It's also happening from an audio perspective, which people often are unaware that their conversations are actually being recorded in many situations as well."

On the positive side of things, Palmer noted that this framework enables the government to identify criminals when they are traveling from one location to the next and preemptively coordinate that information across different policing bodies to mitigate harm.

"On the flip side of that, as an individual person, how comfortable are you that the government knows every move that you're making when you enter into any transportation arm in the United States? How comfortable are you with that? It's happening," Palmer added. "You are being identified. Your face is being stored. These things are very real. It's not something that's futuristic. It's happening today."

Palmer also noted that predictive policing is often a source of tremendous bias in facial recognition technology. In these instances, some AI systems tend to identify persons of color or people from underrepresented groups more frequently.

For example, a person could be walking in an area where government-installed cameras watch for situations where crime may occur. The system could misidentify that person as a criminal with an outstanding warrant and send police to the location.

A man in a mask attends a protest against the use of police facial recognition cameras at the Cardiff City Stadium for the Cardiff City v Swansea City Championship match on January 12, 2020 in Cardiff, Wales. Police are using the technology to identify those who have been issued with football banning orders in an attempt to prevent disorder. (Photo by Matthew Horwood/Getty Images)

The AI tech company Clearview AI recently made headlines for its misuse of consumer data. It provides facial recognition software to law enforcement agencies, private companies and other organizations. Its software uses artificial intelligence algorithms to analyze images of faces and match them against a database of more than 3 billion photos scraped from various sources, including social media platforms like Facebook, Instagram and Twitter, all without users' permission. The company has already been fined millions of dollars in Europe and Australia for such privacy breaches.

Despite being banned from selling its services to most U.S. companies due to breaking privacy laws, Clearview AI has an exemption for the police. The company's CEO, Hoan Ton-That, says hundreds of police forces across the U.S. use its software.

Critics of the company argue that the use of its software by police puts everyone into a "perpetual police lineup." Whenever the police have a photo of a suspect, they can compare it to your face, which many people find invasive. The software also raises questions about civil liberties and civil rights, and it has falsely identified people despite its typically high accuracy rate.

"Biased artificial intelligence, particularly machine learning, often happens because the data that has been fed into it is either incomplete or unbalanced. So, if you have a data set that has a huge number of Caucasian faces, white faces in it, and it doesn't have those that have more that have darker skin tones in the data stores, then what happens is the artificial intelligence learns more effectively on the larger dataset. So, the smaller dataset gets less training, less learning," Palmer said.

To address imbalances in an AI dataset, Palmer said synthetic data could be used to balance the dataset and therefore reduce the inherent machine learning bias.

Synthetic data is information that is manufactured by an AI rather than collected from real-world events. For example, an AI can create a clean data set, like images of non-existent people, from scratch. This data can then be plugged into the model and used to improve the existing algorithm, which may or may not already include data from real individuals.
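
As a rough illustration of the balancing idea Palmer describes, the Python sketch below counts how many faces each group contributes to a training set and how many synthetic images would be needed to bring every group up to the size of the largest one. The group names, the counts and the function name are hypothetical, and generating the synthetic faces themselves is outside the scope of the sketch.

```python
from collections import Counter


def synthetic_samples_needed(group_labels):
    """Return how many extra samples each group needs to match the largest group."""
    counts = Counter(group_labels)
    target = max(counts.values())
    return {group: target - n for group, n in counts.items()}


# Hypothetical training set: 900 faces in one group, 100 in another.
labels = ["lighter"] * 900 + ["darker"] * 100
needed = synthetic_samples_needed(labels)
print(needed)  # {'lighter': 0, 'darker': 800}
```

Matching group sizes is only one possible target. It addresses the unbalanced-data problem, not the problem of data that is missing altogether.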

But Palmer stressed that it is "extremely difficult" to remove all bias from training data because the data is created by humans, who all have an inherent bias.

One of the biggest challenges with AI training, according to Palmer, is trying to identify what is not there.

"Well, it's not very simple, but it's much simpler to identify data that is flawed than to identify data that is fully missing," Palmer said. "So, in order to do so, if you bring a diverse perspective of people, lots of different lived experiences in their background into conversations where those products are created, it's much easier to have all of those different viewpoints say, 'oh, you didn't even think about this, or you didn't think about that.' And that's how we make sure that we are using both technology and people together to come to make the best possible solutions."

Certified Information Privacy Professional Jodi Daniels agreed that profiling is a significant concern associated with facial recognition systems.

She noted that Flock Safety's automated license plate reader (ALPR) cameras are among several systems that work with police departments, neighborhood watches and private customers to create "hot lists," which run all license plates against state law enforcement watch lists and the FBI criminal database and generate alerts.

An image of an automatic number-plate recognition surveillance camera. (Niall Carson/PA Images via Getty Images)

"Let's just take a neighborhood. It's monitoring the license plate in and out of the neighborhood," Daniels said. "The person who set up the neighborhood now has access to that. Well, do I really want the neighborhood person knowing my in and out in every time that I'm coming and going? I don't want crime. So, I like the idea of that. But what are the controls in place so that they're not really monitoring every single thing that I'm doing and that they have full access to it?"

Daniels highlighted two pivotal instances that have sparked debate about facial recognition and helped cultivate biometric laws.

In the summer of 2020, law enforcement in San Diego combed through video of Black Lives Matter protests and riots and used it to profile various people.

San Francisco and Oakland have already banned the use of facial recognition by law enforcement. In Portland, Oregon, a citywide ban forbids the use of the tech by any group, whether private or public.

Just last month, it was revealed that between 2019 and 2022, several groups of Tesla employees privately shared videos and images recorded by customers' car cameras.

"This is to me, like I didn't say it was okay for you to video me everywhere I went or take a picture and match me up to everything over here. And that is why the laws that are coming into play for biometrics, every single one of them are all opt-in laws because it's considered so sensitive and so personal about me, and what happens if that is used in any kind of way to discriminate against me or used incorrectly? You know, the systems aren't always perfect," Daniels said.

She added that the same basic concept of privacy extends across our physical movements, handheld devices, and biometric data.

Passengers queue up to pass through the north security checkpoint Monday, Jan. 3, 2022, in the main terminal of Denver International Airport in Denver, Colorado. (AP Photo/David Zalubowski)

"So, if you think about just the idea of being tracked, it, most people don't love the idea of someone tracking you," Daniels said. "If we took this outside the digital world, and we were on the street, and you had someone following, taking a note at every single thing that you ever did and taking a picture of it all the time. After about five seconds, you probably turn around and say, What are you doing? No one likes that. People don't want someone tracking and stalking and notating every single thing about their life."

Similarly, a person would not want to hand all their online movements and actions on a cell phone or a computer over to another person.

Recently, it was discovered that phone spyware had been sold to various governments throughout the world and was meant to be used mainly to spy on journalists, activists and political opponents.

A report released by Citizen Lab reveals that the spyware, which has been given the name Reign, is being used to monitor the activities of targeted high-profile individuals. The Microsoft Threat Intelligence team was able to analyze the spyware and found that it was provided by the Israeli company QuaDream.

Although Reign has not yet been detected as a threat to the U.S. government, and it doesn't seem to be targeting low-profile citizens, there have been at least five targeted spyware cases in North America, Central Asia, Southeast Asia, Europe and the Middle East.

An IDF officer analyzes visual information generated with the help of an artificial intelligence. (IDF Spokesperson unit)

According to Daniels, individuals would also not want their biometric data used for facial recognition near their place of residence. This is why most people place cameras outside their home rather than inside (unless they have a babysitter, child or dog they need to watch).

But Daniels stressed that there is a difference in laws and applications between general video surveillance and facial recognition.

In the latter, a company or agency takes images of a face, identifies certain data points and extracts that data. Similarly, a bank may gather biometric data through a fingerprint, or a system may do an ID scan to allow the person to pass through security.

"That is a very unique biometric that is special and unique to you. The video surveillance has sort of different notifications typically you're going to find a sign that says you're under video surveillance. And that's a notice kind of situation," Daniels said. "Video surveillance is typically not extracting the facial points out of you and building a facial profile to put in a database to match you up with somebody else."

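The distinction Daniels draws, between recording video and extracting facial points to match against a database, can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not how any particular vendor's system works: the three-number "face vectors," the names in the database, the function names and the 0.9 similarity threshold are all hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Similarity between two face vectors: 1.0 means identical direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def best_match(probe, database, threshold=0.9):
    """Return the closest stored identity, or None if nothing clears the threshold."""
    best_name, best_score = None, threshold
    for name, stored in database.items():
        score = cosine_similarity(probe, stored)
        if score >= best_score:
            best_name, best_score = name, score
    return best_name


# Hypothetical database of stored facial profiles.
database = {"person_a": [1.0, 0.0, 0.0], "person_b": [0.0, 1.0, 0.0]}

match = best_match([0.9, 0.1, 0.0], database)     # close to person_a
no_match = best_match([0.5, 0.5, 0.7], database)  # close to nobody
print(match, no_match)
```

The threshold is the design choice that matters here. Set too low, the system reports matches for people who merely resemble someone in the database, which is the kind of false identification the experts warn about.
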
Kurt Knutsson and the CyberGuy Report contributed to this article.