
Artificial intelligence experts have advised consumers to use caution and trust their instincts when encountering "hallucinations" from artificial intelligence chatbots.

"The number-one piece is common sense," Kayle Gishen, chief technology officer of Florida-based tech company NeonFlux, told Fox News Digital.

People should verify what they see, read or find on platforms such as ChatGPT through "established sources of information," he said.

AI is prone to making mistakes — "hallucinations" in tech terminology — just like human sources.

The word "hallucinations" refers to AI outputs "that are coherent but factually incorrect or nonsensical," said Alexander Hollingsworth of Oyova, an app developer and marketing agency in Florida.

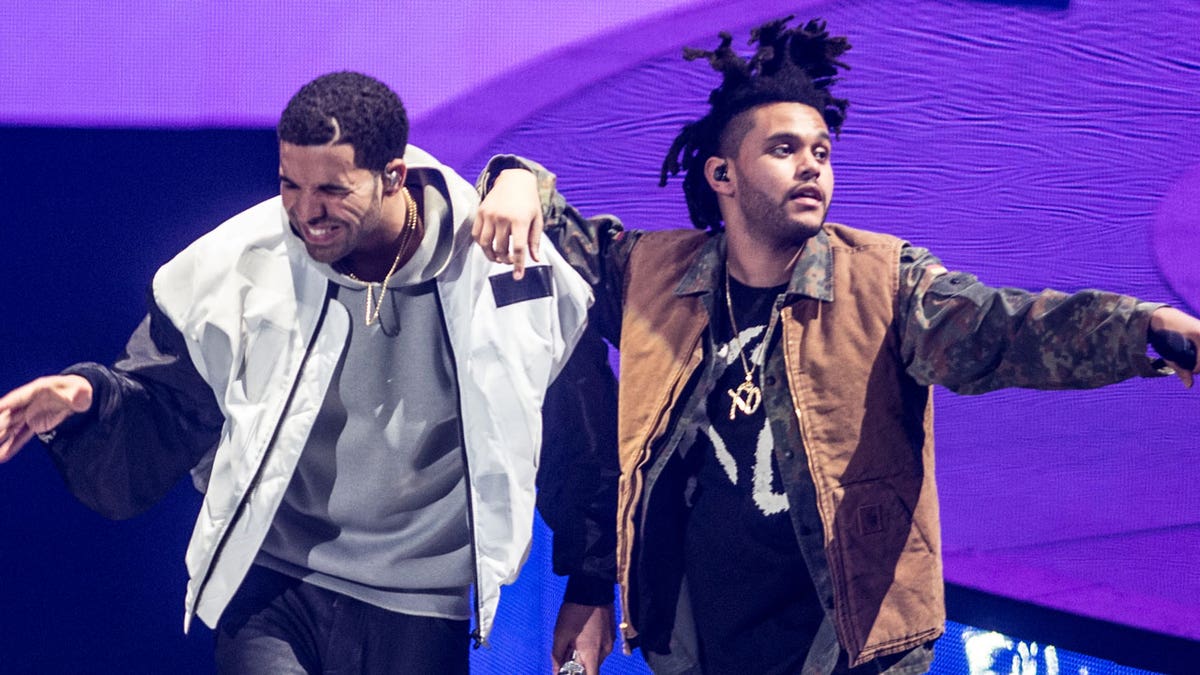

Millions of music fans around the world were duped this week by a new song purporting to be performed by Drake, left, and The Weeknd. It was actually an AI-generated deepfake. (Getty Images)

Images, data or information can look and sound highly credible, authentic and manmade. But they may actually be fake or inaccurate.

Hallucinations are accidental: a chatbot simply doesn’t have the correct data, algorithm or information, or is still learning about a topic, much as any human would. They're honest mistakes, in other words.

"Hallucinations … are coherent but factually incorrect or nonsensical." — Alexander Hollingsworth, tech expert

But AI can pose other dangers, such as "deepfake" videos and images, which appear real but are generated by artificial intelligence.


Pop stars Drake and The Weeknd made headlines in recent days with the release of the new digital hit "Heart on My Sleeve."

It racked up tens of millions of views on social media within days.

Only one problem: it was a deepfake, generated by a TikTok user with an artificial intelligence program.

Music platforms quickly pulled the song — but not before music fans around the world were fooled.

Journalists watch an introductory video by the "artificial intelligence" anchor Fedha on the Twitter account of Kuwait News service, in Kuwait City on April 9, 2023. (YASSER AL-ZAYYAT/AFP via Getty Images)

When it comes to accidental hallucinations — mistakes made by chatbots — researchers are working to counter the problem.

"Researchers employ a mix of techniques (to detect hallucinations) including input conditioning, rule-based filters, external knowledge sources, and human-in-the-loop evaluation," said Hollingsworth.

"The only robust way to identify chatbot hallucinations is auditing of the AI output by humans." — Sean O’Brien

"Companies and research institutions are constantly refining these techniques to minimize the occurrence of hallucinations and improve AI-generated content quality."

But consumers are at the front lines of combating hallucinations, experts say. Individuals need to judge the validity of AI-generated content and information, just as they would any other.

"The only robust way to identify chatbot hallucinations is auditing of the AI output by humans," Sean O’Brien of the Yale Law School Privacy Lab told Fox News Digital.

"This increases cost and effort and reduces the promised benefits of AI chatbots in the first place. That's especially true if you train human auditors well and give them the research tools they need to discern fact from fiction."

Said Copy.AI co-founder Chris Lu, "Detecting chatbot hallucinations typically involves monitoring the AI's output for inconsistencies or irrelevant information."

He added, "This can be done through a combination of human oversight and automated systems that analyze the generated content. Companies including ours are investing in research and development to improve AI models, making them more robust and less prone to hallucinations."
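One simple way to picture automated monitoring for inconsistencies is to ask a chatbot the same question several times and see whether the answers agree. The Python sketch below assumes the answers have already been collected into a list; `consistency_report` and its `min_agreement` threshold are hypothetical, and nothing here reflects how Copy.AI or any other company actually does it.

```python
from collections import Counter


def normalize(answer: str) -> str:
    # Collapse case, extra whitespace and a trailing period so that
    # trivially different phrasings count as the same answer.
    return " ".join(answer.lower().split()).rstrip(".")


def consistency_report(answers, min_agreement=0.75):
    """Summarize how often repeated answers to one question agree."""
    if not answers:
        raise ValueError("need at least one answer")
    counts = Counter(normalize(a) for a in answers)
    top_answer, top_count = counts.most_common(1)[0]
    agreement = top_count / len(answers)
    return {
        "majority_answer": top_answer,
        "agreement": agreement,
        # Low agreement is a warning sign, so hand the case to a human.
        "send_to_human": agreement < min_agreement,
    }
```

Agreement is not proof of accuracy, since a chatbot can repeat the same wrong answer every time. That is why the sketch only decides when to escalate to a human reviewer.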

Deepfakes, especially video, do leave signatures of manipulation that make them possible to detect even with the eye test, said New York-based Victoria Mendoza, CEO of MediaPeanut.

These clues include inconsistencies in audio and video content, odd facial expressions and any quirks or anomalies that seem out of place.

Consumers need to be wary of misinformation from AI chatbots, just as they would any other information source, say experts. (Getty Images)

"Detecting chatbot hallucinations involves monitoring the responses of the chatbot and comparing them to the expected responses," Mendoza said.

"This can be done by analyzing the chatbot’s training data, looking for patterns or inconsistencies in its responses, and using machine learning algorithms to detect any anomalies."


Artificial intelligence itself is and will be used to help spot material that’s inauthentic or manipulated.

"Though deepfakes are often jarring and shocking in their realism, software is able to detect deepfakes easily due to anomalies in the image and video files," O’Brien said.

"Deepfakes leave evidence of their manipulation of the original video footage that social media platforms and apps could reasonably scan for. This would allow for flagging of deepfakes, as well as moderation and removal."

"At the end of the day … you shouldn’t be using technology as a crutch. You should be using it as a tool," said Christopher Alexander, chief communications officer for Liberty Blockchain.

"If you’re asking [AI] to research something for you … you really need to double-check that the information is accurate."

Fox News Digital's Kelsey Koberg contributed reporting.