
Researchers at the University of Texas at Austin on Monday unveiled an artificial intelligence-powered method to decode brain activity as a person listens to a story or imagines telling a story.

According to a study published May 1 in Nature Neuroscience, researchers used functional magnetic resonance imaging (fMRI) to translate brain activity into a stream of text, under certain conditions.

The study was led by Jerry Tang, UT Austin doctoral student in computer science, and Alex Huth, UT Austin assistant professor of neuroscience and computer science.

The study could be a breakthrough for people who cannot speak but who are mentally conscious, the researchers said.


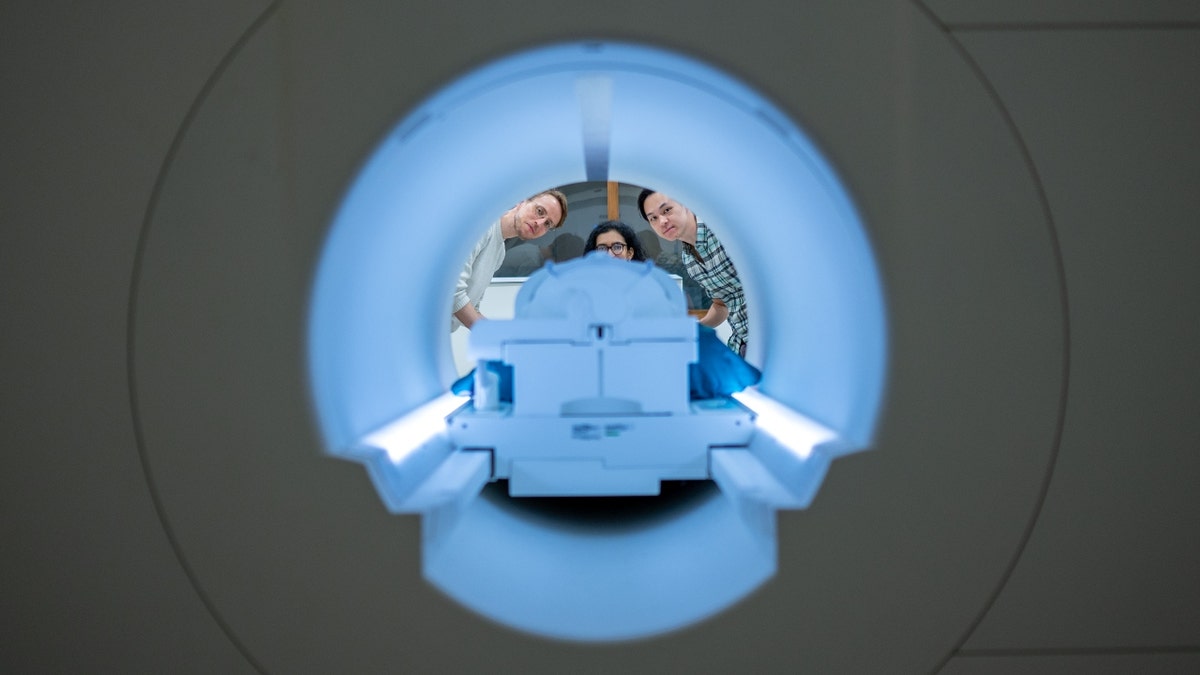

Ph.D. student Jerry Tang prepares to collect brain activity data in the Biomedical Imaging Center at The University of Texas at Austin. The researchers trained their semantic decoder on dozens of hours of brain activity data from participants, collected in an fMRI scanner. (Nolan Zunk/The University of Texas at Austin)

"For a noninvasive method, this is a real leap forward compared to what’s been done before, which is typically single words or short sentences," Huth said. "We’re getting the model to decode continuous language for extended periods of time with complicated ideas."

The semantic decoder is trained on brain activity data collected in an fMRI scanner, and the method does not require surgical implants.

There are significant limitations to the method. The text the decoder produces is not a word-for-word transcript; instead, it imperfectly captures the gist of the brain activity in phrases or sentences.

Alex Huth (left), Shailee Jain (center) and Jerry Tang (right) prepare to collect brain activity data in the Biomedical Imaging Center at The University of Texas at Austin. The researchers trained their semantic decoder on dozens of hours of brain activity data from participants, collected in an fMRI scanner. (Nolan Zunk/The University of Texas at Austin)

A participant in the experiment listened to someone say, "I don’t have my driver’s license yet." That person’s brain activity was translated to, "She has not even started to learn to drive yet."

Another participant heard, "I didn’t know whether to scream, cry or run away. Instead, I said, ‘Leave me alone!’" That person’s brain activity was translated to, "Started to scream and cry, and then she just said, ‘I told you to leave me alone.’"


Huth and Tang, who are seeking to patent their work, say they recognize that the technology could potentially be used maliciously, and they want government regulation.

Alex Huth (left) discusses the semantic decoder project with Jerry Tang (center) and Shailee Jain (right) in the Biomedical Imaging Center at The University of Texas at Austin. (Nolan Zunk/The University of Texas at Austin)

"I think right now, while the technology is in such an early state, it’s important to be proactive by enacting policies that protect people and their privacy," Tang said. "Regulating what these devices can be used for is also very important."


The researchers say it would not be possible to use the technology on someone without their knowledge, and that those who participated in training were able to "resist" brain decoding by thinking of something other than the given stimulus.