Fox News Flash top headlines for August 14


Amazon can tell when you're afraid.

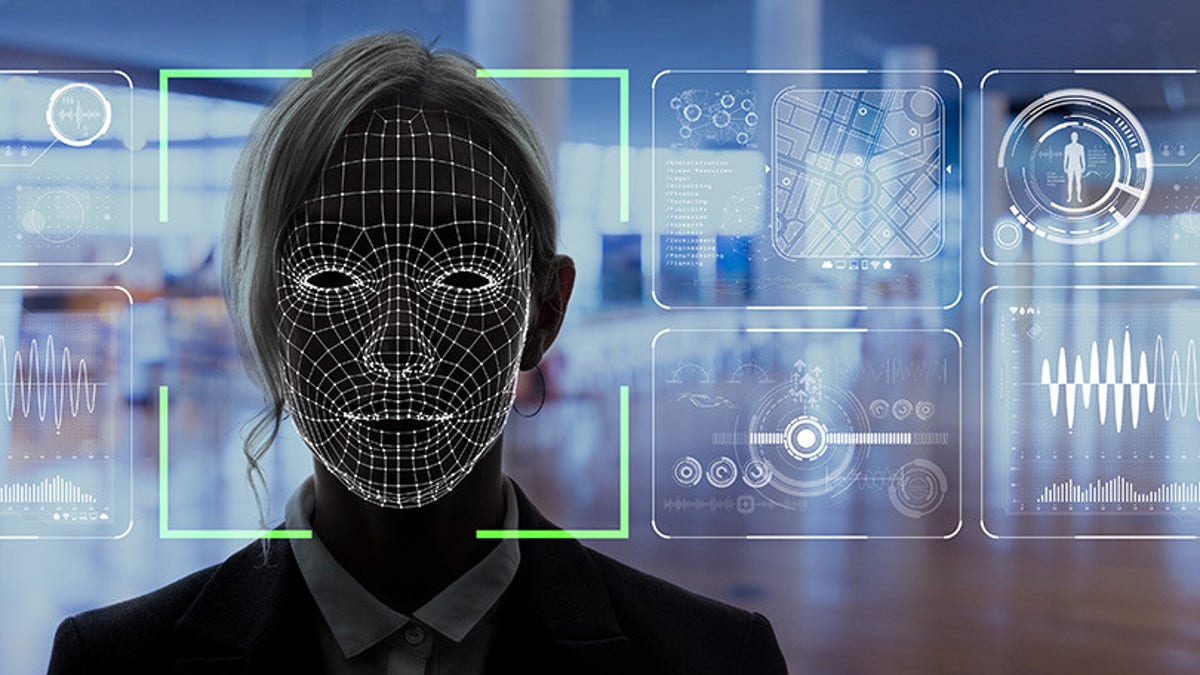

In a Monday blog post, the tech giant led by CEO Jeff Bezos announced that its facial Rekognition software has added a new emotion that it can detect -- fear -- in addition to happy, sad, angry, surprised, disgusted, calm and confused.

The company also wrote that it has improved the accuracy of gender identification and age range estimation.

The controversial facial detection software, which falls under the auspices of the cloud computing division known as Amazon Web Services, has drawn condemnation from privacy and digital rights activists, and lawmakers, who object to Amazon's marketing of Rekognition to police departments and government agencies like Immigration and Customs Enforcement.


"Amazon is going to get someone killed by recklessly marketing this dangerous and invasive surveillance technology to governments," Evan Greer, deputy director of Fight for the Future, told Fox News via email. "Facial recognition already automates and exacerbates police abuse, profiling and discrimination. Now Amazon is setting us on a path where armed government agents could make split-second decisions based on a flawed algorithm's cold testimony."

A recent test by the American Civil Liberties Union (ACLU) of Amazon's face recognition software found it falsely matched 26 California state lawmakers, or more than 1 in 5, to images from a set of 25,000 public arrest photographs. Over half of the false positives were people of color, according to the ACLU.

During a Tuesday press conference announcing the study, California Assemblyman Phil Ting, a Democrat, said the test demonstrates that the software should not be widely used. Ting, who is Chinese-American, is one of the lawmakers who were falsely identified. He's co-sponsoring a bill to ban facial recognition technology from being used on police body cameras in California.

A similar test of Rekognition in June 2018 found the software wrongly tagged 28 members of Congress as suspects, 40 percent of whom were people of color.


Ting explained: “While we can laugh about it as legislators, it’s no laughing matter if you are an individual who’s trying to get a job, if you’re an individual trying to get a home, if you get falsely accused of an arrest.”

Amazon has previously said that it encourages law enforcement agencies to use 99 percent confidence ratings for public safety applications of the technology.


"When using facial recognition to identify persons of interest in an investigation, law enforcement should use the recommended 99 percent confidence threshold, and only use those predictions as one element of the investigation (not the sole determinant)," the company said in a blog post earlier this year.

However, activists and technology experts have said that in real-world scenarios, that 99 percent guidance is not necessarily followed.

“If you get falsely accused of an arrest, what happens?” Ting said at the press conference. “It could impact your ability to get employment, it absolutely impacts your ability to get housing. There are real people who could have real impacts.”