(FoxNews.com)

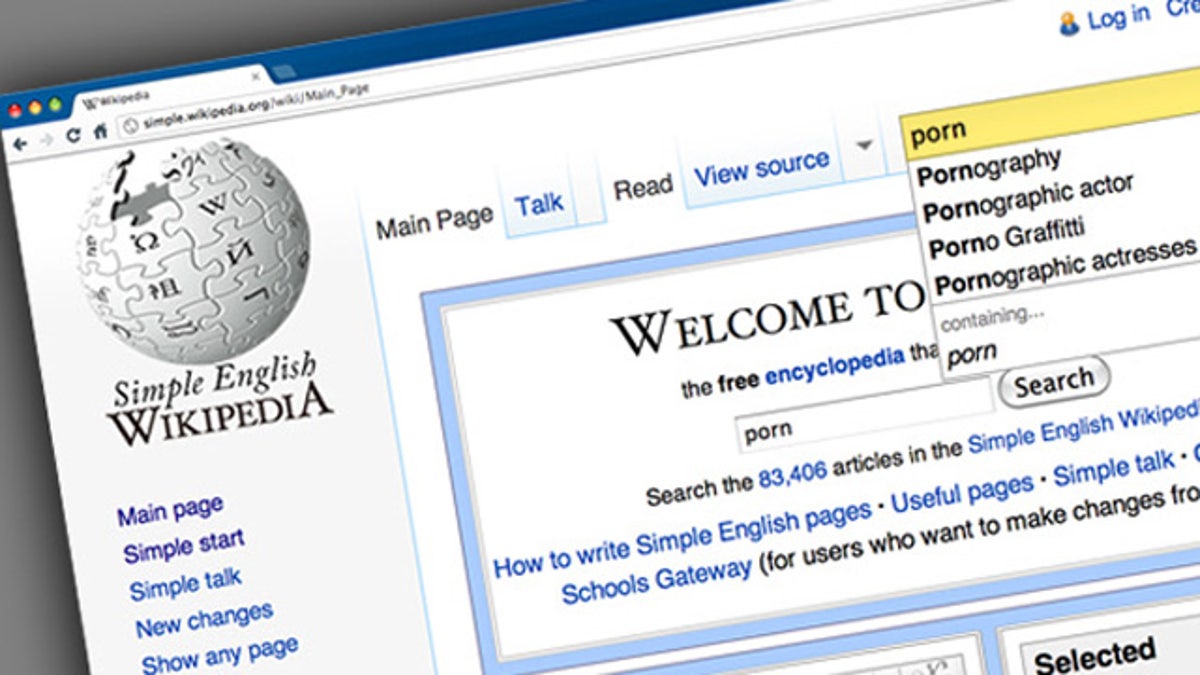

Wikipedia has turned down a more or less free offer for software that would keep minors and unsuspecting web surfers from stumbling upon graphic images of sex organs, acts and emissions, FoxNews.com has learned. Those sexually explicit images remain far and away the most popular items on the organization's servers.

Representatives for NetSpark, an Israel-based Internet solutions company, say they have developed a filtering technology that not only detects and blocks questionable imagery but also learns over time, making it ideal for determining the appropriateness of an image. The company offered its services to the Wikimedia Foundation -- which has yet to return its calls.

“I contacted them a couple of times back in July and August, but never heard back from them. It seems like it’s not a priority for them at this point,” Sarah Minchom, a product and marketing manager for NetSpark, said to FoxNews.com.

Maybe it should be. A website listing the most-viewed content on Wikimedia Commons, the image-hosting arm of the Internet encyclopedia, reveals that virtually all of it consists of sexually explicit photographs and categories.

NetSpark claims that its Graphic Inspection filter is “the strongest solution available in the market.” The company says its technology is a “learning engine” that can analyze the components of an image precisely enough to determine the difference between a pornographic image and an advertorial image that has models wearing swimwear or lingerie.

And it works, said Larry Sanger, one of the original founders of Wikipedia.

“It actually works quite well. I was pretty impressed,” Sanger, who is unconnected with the filtering company, told FoxNews.com. The former philosophy professor keeps close tabs on Wikipedia and its governance, despite leaving the project in 2002 and later founding Citizendium, a competing open-source encyclopedia.

He has long been a critic of Wikipedia for not taking a more proactive approach to the issue of questionable material on the site. Filters would solve the problem, he said.

“If Wikipedia made the site available with this filter or something similar, then a lot of my objections would disappear,” he said, adding that he has worked closely with NetSpark on how to get Wikipedia to use the web filtering technology.

“The idea we discussed was that NetSpark would either donate or heavily discount the cost of the filter for Wikipedia. This is something they could easily afford given their budget,” Sanger told FoxNews.com.

In 2010, NetSpark's technology was deployed with Israeli technology company Cellcom to filter the mobile web, creating a "Kosher Internet" for Jewish users. That same year, the National Parenting Center awarded the company its Seal of Approval for the filtering technology.

Jimmy Wales, the other co-founder of the site and its current head, agrees that a filter is necessary.

“We ought to do something to help users customize their own experience of Wikipedia with respect to showing images that are not safe for work,” Wales wrote on the site in mid-July, opening up a discussion of just how to do that.

A vigorous debate about the topic appears to have ended, with no resolution in sight. A request for more information sent by FoxNews.com to Wikimedia Foundation executive director Sue Gardner went unanswered.

Oddly, the Wikimedia Foundation is in favor of filters -- it simply seems paralyzed, unable to adopt a solution.

In May 2011, Wikimedia drafted a resolution on controversial content in which the foundation’s board members said they “support the principle of user choice” as well as “the principle of least astonishment,” and urged users “to continue to practice rigorous active curation of content,” paying particular attention to potentially controversial items.

The resolution also stated that the Foundation’s board urged the executive director to implement a personal image-hiding feature for users and encouraged the Wiki community to work with the foundation “in developing and implementing further new tools for using and curating.”

Yet 15 months after that resolution was passed, none of the solutions it calls for has been put in place. Until Wikipedia adopts those filters, it may be tacitly endorsing the promotion and exposure of pornography to children, said Jason Stern, an attorney specializing in Internet and social media issues.

"Even if Wikipedia didn't have the budget to hire a company to install the filtering software the site so desperately needs, how can they justify their refusal to take down the offensive and disturbing images and videos being displayed on its site?" he told FoxNews.com.

“It’s an important issue. They have a social responsibility to address this,” Minchom said.