'If we learned anything from Terminator...': Americans question threat of AI

Americans share whether they're concerned by recent expert predictions that unregulated AI could turn on its human inventors and wipe out our species.

AUSTIN, Texas – Americans who spoke with Fox News questioned tech titans' recent warnings that AI's unregulated and rapid advancement could eventually kill humanity. But one man said he feared a Terminator-like ending.

"I'm not concerned," Zachary, of Austin, told Fox News. "Even if it mimics the human brain, I don't believe that it is capable of manipulating us in order to overthrow us."

But Joshua, a bartender, wasn't as confident.

"If we've learned anything from Terminator 2 and Skynet, it's that definitely the robots can take over at some point in time," he told Fox News. "So we have to be very wary and careful of that for sure."

Eliezer Yudkowsky, a Machine Intelligence Research Institute researcher, recently called for an "indefinite" moratorium on advanced AI training.

"If somebody builds a too-powerful AI, under present conditions, I expect that every single member of the human species and all biological life on Earth dies shortly thereafter," he wrote in a March 29 op-ed for Time.

Ryan, of Austin, said he doesn't envision AI becoming a danger, and instead thinks it could be a useful tool.

"I do see people taking advantage of it and businesses taking advantage of it," he told Fox News.

Tech leaders, including Tesla CEO Elon Musk and Apple co-founder Steve Wozniak, signed an open letter urging AI developers to pause for six months the training of systems more powerful than the current iterations and to use that time to develop safety standards. Yudkowsky said six months was insufficient.

Zachary, meanwhile, thinks there are already "a lot of safeguards in place" for AI development.

"I do believe there's a limit on the ability that AI has in our world," he told Fox News.

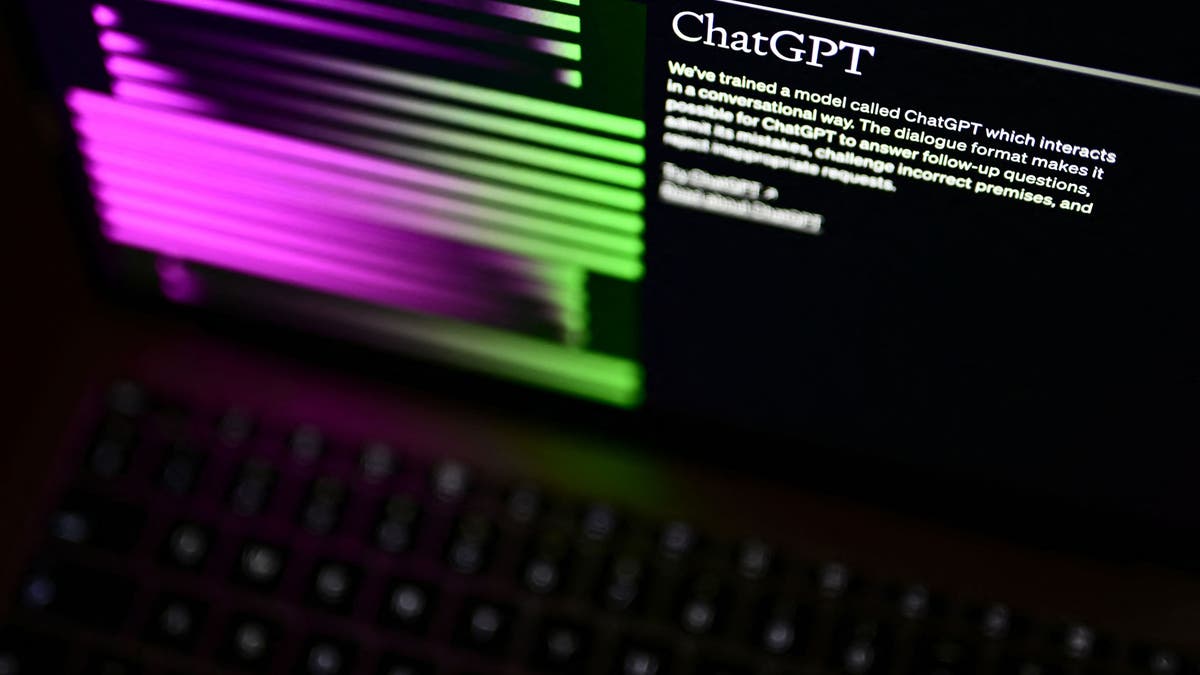

GPT-4, an advanced AI language model, exhibits "human-level performance" on some standardized tests, including scoring around the 90th percentile on a simulated bar exam, according to its creator, OpenAI. The tech leaders pushing for the pause said AI systems with "human-competitive intelligence" could potentially outsmart and replace humans.

An Austin resident working in cybersecurity said he hopes AI could eventually help humans carry out unsafe jobs or replace them in those roles altogether.

"I would hope that they do make many highly dangerous jobs obsolete and help people who fight fires, dispose of bombs, make their job a whole lot easier and safer," he told Fox News.

Leigh, a lawyer, said AI should have human oversight.

"Whatever system you put AI operating on, you still need human oversight because AI doesn't have the ability to have ethical or moral reasoning," the Austinite told Fox News. "It's going to follow the program."

"You certainly wouldn't want AI in charge of nuclear war or bombs," Leigh said.

Joshua felt mild trepidation at the thought of AI's potential.

"The fact that … the technology is there is slightly unnerving," he told Fox News.

OpenAI released ChatGPT on Nov. 30, 2022. (Beata Zawrzel/NurPhoto via Getty Images)

Ryan said he didn't think AI could supersede humanity.

"I'm not really that concerned," he told Fox News. "I don't think it's going to take over any of us at all."
