Artificial intelligence is already revolutionizing law enforcement, which has implemented advanced technology in its investigations, but "society has a moral obligation to mitigate the detrimental consequences," a recent study says.

AI is in its teenage years, as some experts have said, but law enforcement agencies are already integrating predictive policing, facial recognition and technologies designed to detect gunshots into their investigations, according to a North Carolina State University report published in February.

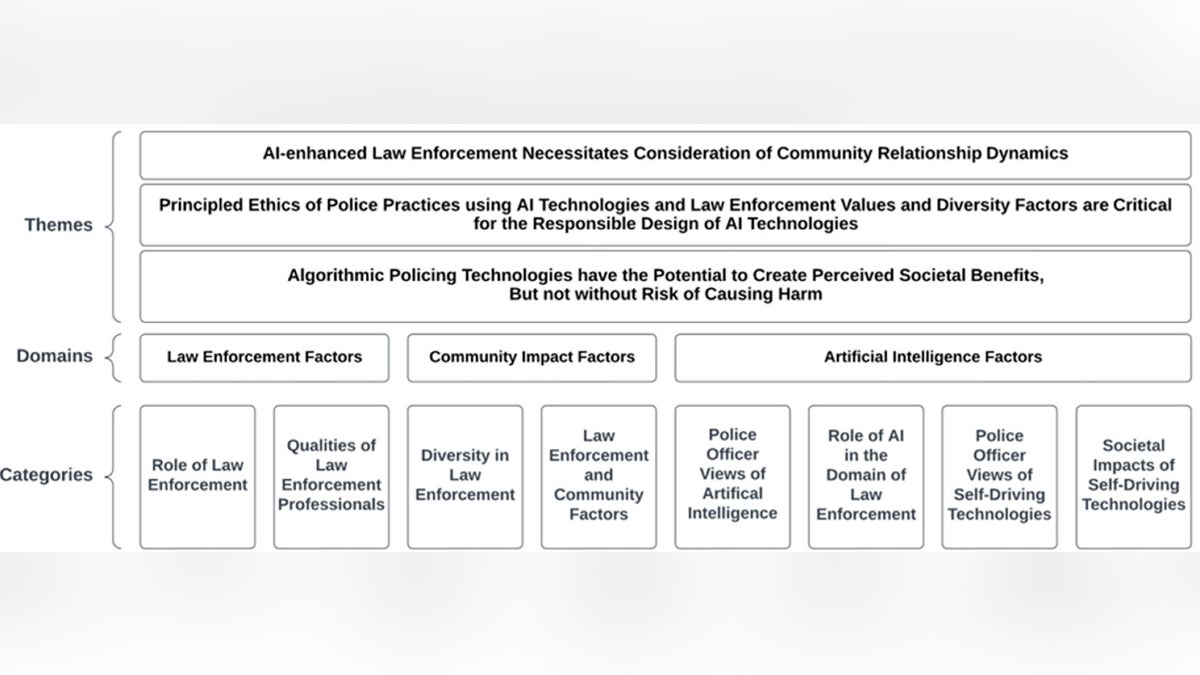

The report was based on 20 semi-structured interviews with law enforcement professionals in North Carolina and examined how AI affects the relationships between communities and police jurisdictions.

"We found that study participants were not familiar with AI, or with the limitations of AI technologies," said Jim Brunet, a co-author of the study and director of NC State’s Public Safety Leadership Initiative.

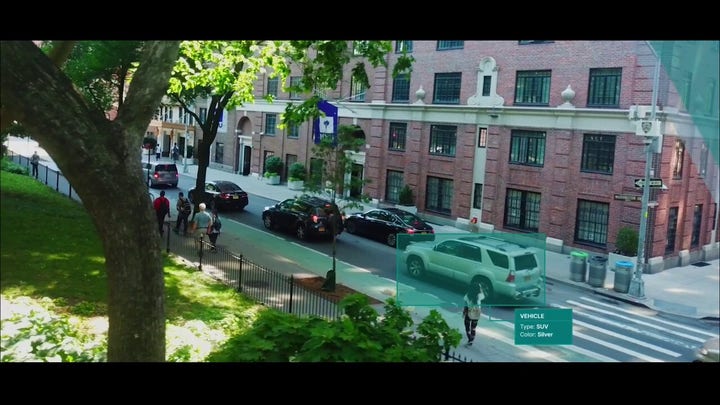

Crowd of business people tracked with technology walking on busy urban city streets. CCTV AI facial recognition big data analysis interface scanning, showing animated information. (Getty Images)

"This included AI technologies that participants had used on the job, such as facial recognition and gunshot detection technologies," he said. "However, study participants expressed support for these tools, which they felt were valuable for law enforcement."

Law enforcement officials believe AI will improve public safety but could erode trust between police and civilians, according to the study.

This comes at a time when American cities are wrestling with the politically divisive issue of curtailing crime while regaining the public's trust in the wake of George Floyd's murder at the hands of disgraced police officers.

Ed Davis, who was Boston's police commissioner during the Boston Marathon bombing in 2013, told Fox News Digital that AI "will ultimately improve investigations and allow many dangerous criminals to be brought to justice."

Now-retired Boston Police Commissioner Edward Davis III testifying on Capitol Hill in Washington, on July 10, 2013, before the Senate Homeland Security and Governmental Affairs Committee hearing to review the lessons learned from the Boston Marathon bombings. (AP Photo/J. Scott Applewhite)

But it comes with risks and pitfalls, Davis said, and criminals will have access to the same technology, which could negatively impact police investigations.

The well-respected commissioner's comments are backed by the study's findings.

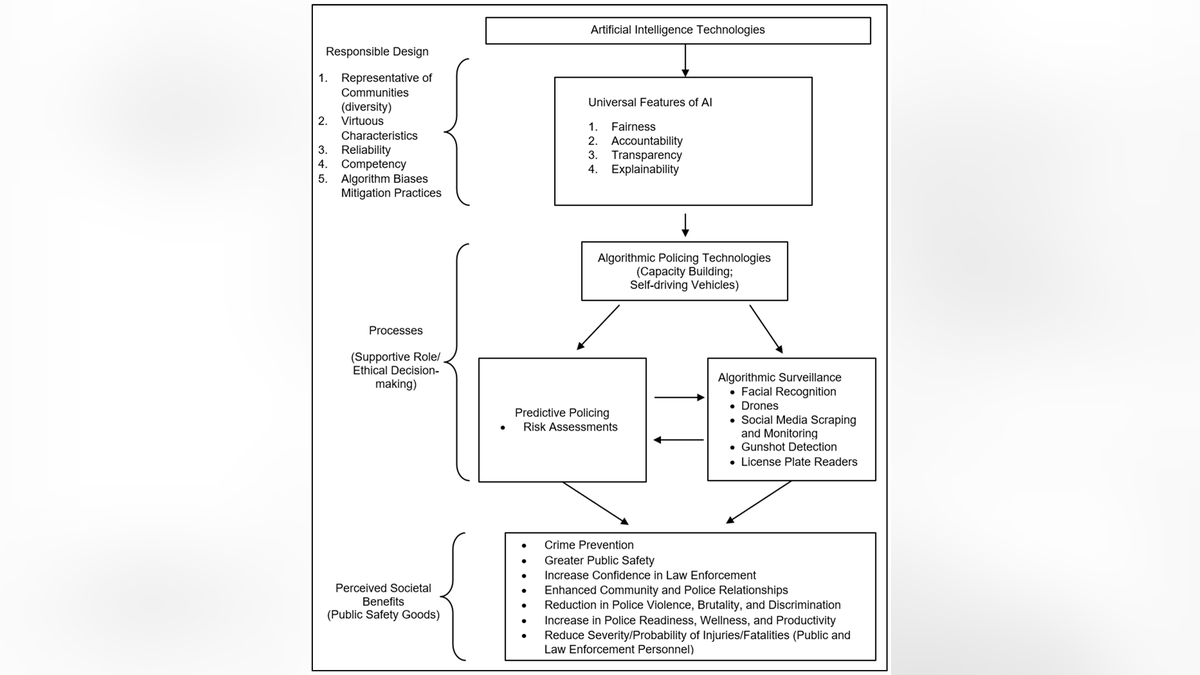

"Policymaking guided by public consensus and collaborative discussion with law enforcement professionals must aim to promote accountability through the application of responsible design of AI in policing with an end state of providing societal benefits and mitigating harm to the populace," the study concludes.

"Society has a moral obligation to mitigate the detrimental consequences of fully integrating AI technologies into law enforcement."

Part of the issue is police officers' general lack of knowledge about the capabilities of AI technologies and how they work, said Ronald Dempsey, the first author of the study and a former graduate student at NC State.

That "makes it difficult or impossible for them to appreciate the limitations and ethical risks," Dempsey said. "That can pose significant problems for both law enforcement and the public."

Law enforcement's use of facial recognition boomed after the Jan. 6, 2021, Capitol riot.

Twenty out of 42 federal agencies that were surveyed by the Government Accountability Office in 2021 reported they use facial recognition in criminal investigations.

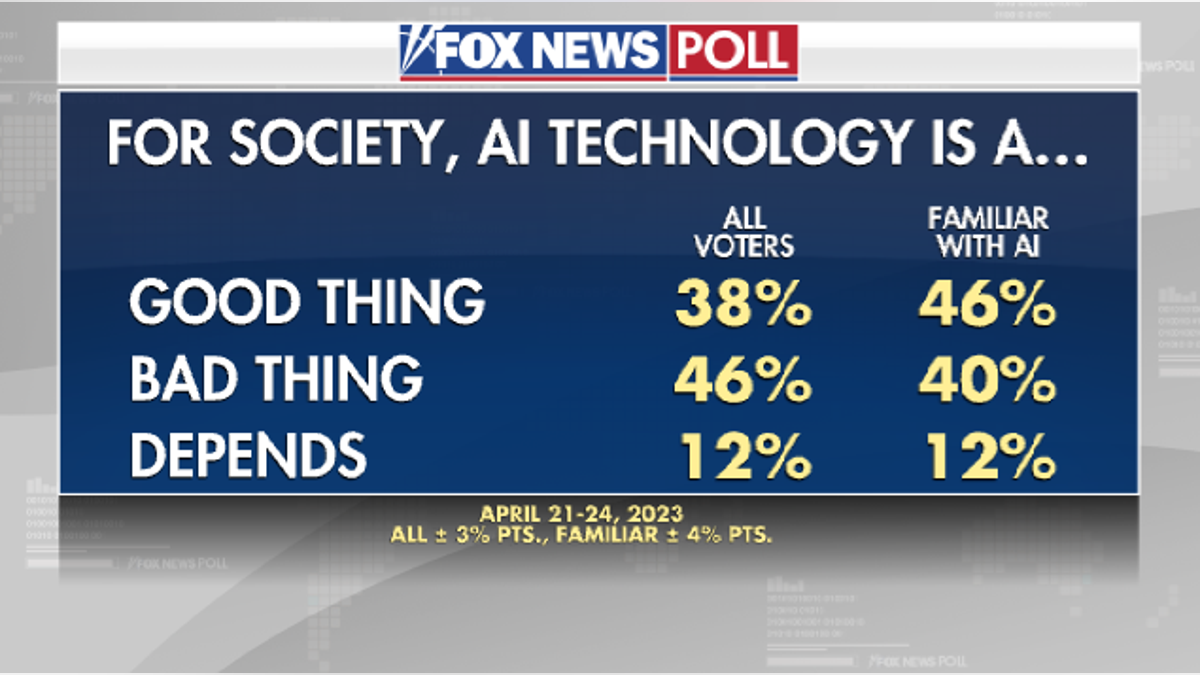

A Fox News poll on AI. (Fox News)

If emerging AI technologies "are well-regulated and carefully implemented," they can serve as a public safety good that "can potentially increase community confidence in policing and the criminal justice system," the study found.

"However, the study participants expressed concerns about the risks of algorithm bias (diversity and representativeness challenges), the challenge of replicating the human factor of empathy, and concerns about privacy and trust.

"In addition, fairness, accountability, transparency, and explainability challenges remain as presented in the broader academic debate," the study says.

AI has the power to bridge or deepen the divide between police and the public, according to the study, which said it's essential that law enforcement leaders have a seat at the table for all talks about a framework for how police can use the tech.

Veljko Dubljević, corresponding author of the study and an associate professor at North Carolina State University, said the guidelines can be used to inform AI decision-making.

"It’s also important to understand that AI tools are not foolproof," Dubljević said. "AI is subject to limitations. And if law enforcement officials don’t understand those limitations, they may place more value on the AI than is warranted – which can pose ethical challenges in itself."

Police have already made mistakes using facial recognition that led to wrongful arrests.

AI algorithms falsely identified African American and Asian faces 10 to 100 times more often than White faces, according to a 2019 study by the National Institute of Standards and Technology.

Addressing ethical and societal concerns by responsible design in AI policing technologies. This figure captures the findings from the study and synthesizes a framework with existing literature for addressing the ethical and societal concerns in AI policing by responsible design. (North Carolina State University)

"There are always dangers when law enforcement adopts technologies that were not developed with law enforcement in mind," Brunet said.

"This certainly applies to AI technologies such as facial recognition. As a result, it’s critical for law enforcement officials to have some training in the ethical dimensions surrounding the use of these AI technologies."

The study emphasized creating a transparent culture of accountability that shows how AI technologies are being used in police investigations.

A recent New York Times report about a wrongful arrest based on facial recognition showed that court documents and police reports didn't include any reference to the use of the AI tech, a practice that is reportedly becoming more prevalent.

Overarching themes in law enforcement and artificial intelligence technologies. (North Carolina State University)

"As a final point, AI policing technologies must be explainable, at least generally, in how decisions are reached," the NC State study said.

"Law enforcement professionals should, at a minimum, have a broad understanding of the AI technologies used in their jurisdictions and the criminal justice system as a whole. Procedural training for police officers who employ artificial intelligence technology."

The study focused on North Carolina and is intended as a "snapshot" of an emerging trend, one that the authors say calls for more research and more education for law enforcement professionals.