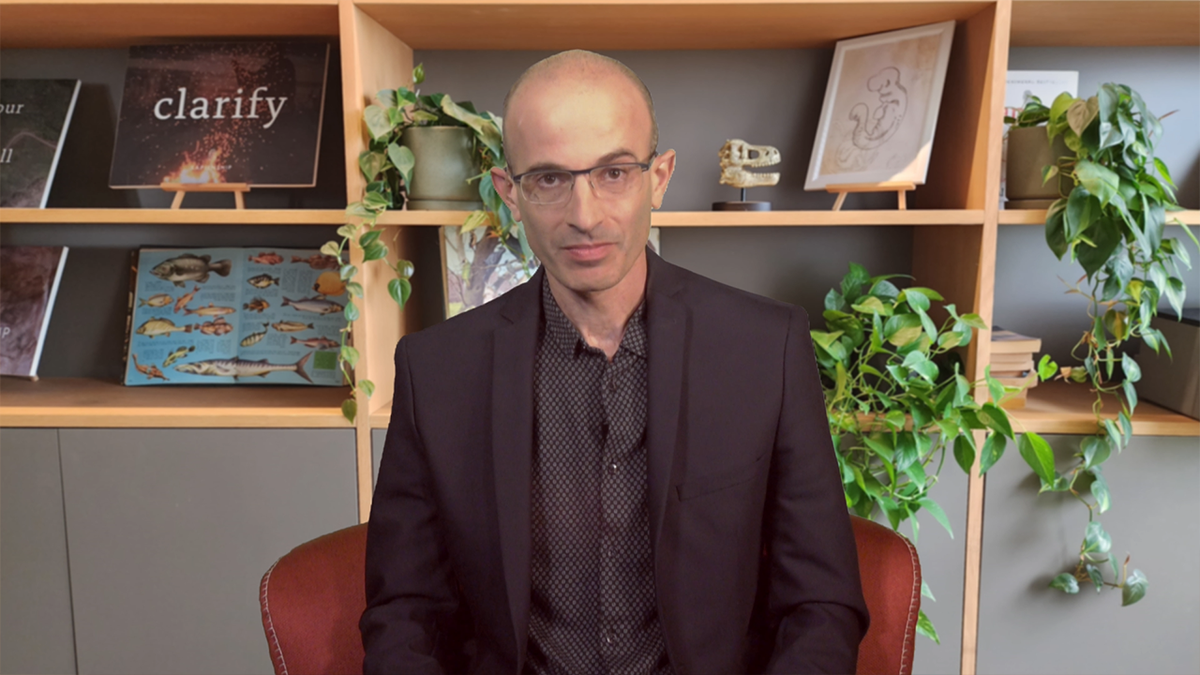

Yuval Noah Harari talks signing on to AI letter with Elon Musk, dangers of the technology

The Israeli author and historian said a lack of safety measures in new AI tech could cause the West to lose to China.

Israeli historian and "Sapiens" author Yuval Noah Harari claimed there is no kill switch for artificial intelligence (AI) and urged the implementation of safety checks and guardrails, warning that society otherwise risks collapse.

During a March interview with ABC News, OpenAI CEO Sam Altman was asked if ChatGPT had a "kill switch" in the event their AI went rogue. Altman responded with a quick "yes."

"What really happens is that any engineer can just say we're going to disable this for now. Or we're going to deploy this new version of the model," he added.

But Harari disagreed.

"When you produce these tools, as long as you haven't released them into the public sphere, you can make all kinds of kill switches," Harari said. "But once you release them into the public sphere, people start to depend on them for their livelihood, for their social relations, for their politics. It's too late. You cannot pull the switch because the switch will cause economic collapse."

Israeli historian and "Sapiens" author Yuval Noah Harari warned that the judicial overhaul plan in Israel could turn the country into a dictatorship if passed. (Fox News)

According to Harari, recent technological revolutions have exacerbated social disparities and led to political turmoil. However, he said that anguish is "nothing" compared to what could await society in the next few years.

"If we don't take care of it, then some people will become extremely rich and powerful because they control the new tools and other people could become part of a new 'useless class.' I use this very strong language of a useless class. I know people feel it's terrible to talk like this," Harari said. "Obviously, people are never useless from the viewpoint of their family or friends or community, but from the viewpoint of the economic system, they could become, we don't need them. They have no skills that we need anymore."

Harari described the possibility as a "terrible danger," which underscored the need to protect people before it's too late.

Experts have taken numerous positions on the rapid development of AI. Some have advocated for the technology to continue to evolve and be pushed to consumers in the United States in an effort to stay competitive with other governments, such as the Chinese Communist Party.

"Artificial intelligence poses a direct threat to humanity, but it'll become even more a threat to humanity if China masters it before we do," China expert Gordon Chang recently told Fox News. "We see this, for instance, in the gene editing of humans."

Sam Altman speaks at the Wall Street Journal Digital Conference in Laguna Beach, California, U.S., October 18, 2017. (REUTERS/Lucy Nicholson/File Photo)

But China has enacted regulations on deep-synthesis technology available on AI platforms, rules that have yet to be established in the U.S. In January, the Cyberspace Administration of China placed substantial restrictions on AI-generated content, requiring that these forms of media carry unique identifiers, such as watermarks.

Elon Musk, Steve Wozniak, Harari and other tech leaders signed a letter in March asking AI developers to "immediately pause for at least six months the training of AI systems more powerful than GPT-4."

"What will cause the West to lose to China is if we continue releasing these kinds of powerful tools into our public sphere without any safety measures because they destroy the foundations of our society, they destroy the foundations of democracy," Harari told Fox News Digital.

Harari said he does not want to stop the development of all AI but is calling for a pause on the release of extremely powerful AI tools into the public sphere.

He compared his opinion on AI to developing a new medicine, wherein law and medical standards obligate a company to undergo a rigorous safety check to determine the product's safety in the short and long term.

"What would you say if you had biotech labs engineering new viruses and just releasing them to the public sphere simply to impress their shareholders with their enhanced capabilities and push up the value of their shares," Harari said. "We would say this is absolutely insane. These people should be in jail."

Harari believes AI has the potential to be "much more powerful" than any virus and expressed concern that researchers develop these extremely powerful tools and quickly release them without safety checks.

Close-up of the icon of the ChatGPT artificial intelligence chatbot app logo on a cellphone screen. Surrounded by the app icons of Twitter, Chrome, Zoom, Telegram, Teams, Edge and Meet. (iStock)

"At the most basic level, AI is not like any tool in human history. All previous tools in human history that we invented empowered us because decisions on how to use the tools always remained in human hands," Harari added. "If you invent a knife, you decide whether you use it to murder somebody or to save their life in surgery or cut salad. The knife doesn't decide."

He also compared AI to the radio or the atomic bomb. Radio does not decide what to broadcast and a nuclear weapon does not determine who to bomb. There is always a human element, whereas AI is the "first tool in human history" that can make decisions by itself, about its own usage and about life, Harari said.

In a recent New York Times opinion piece, Harari described social media as the "first contact" between AI and humanity, an encounter that he claimed humanity lost.

When asked to discuss this position further, Harari noted that human beings generate social media content, while AI curates the content and the algorithm decides what to show the user. Harari said this primitive AI has "completely destabilized our society" merely by being handed a simple task: maximizing user engagement.

"The AI discovered that the best way to grab people's attention and keep them on the platform is to press the emotional buttons in their brain to generate anger and hatred and fear," Harari said. "Then you have this epidemic of anger and fear and hatred in society which destabilizes our democracy."

The new generation of AI tools, like ChatGPT and GPT-4, does not just curate human content. These tools can generate the content themselves.

Harari invited people to imagine a society where most of the stories, songs and images are created by a non-human intelligence that understands how to manipulate our emotions.

But Harari asserted it is not all negative. AI can help us have better healthcare, treat cancer and help humanity solve a variety of problems. To achieve this without negative ramifications, Harari floated a variety of safety measures.

One way to combat disinformation, Harari suggested, is to reinforce the need for trustworthy institutions. Today, individuals and companies can publish anything, regardless of whether it is based on objective reality. They can also produce deepfakes, which are fake videos of real people.

"It looks exactly like Trump or Biden. They sound exactly like Trump or Biden. But you can't trust it. Because you now know. Well, they can generate anything. So, what can you trust? You trust the publisher. You trust the institution," Harari said.

He also suggested further regulation or laws on data privacy. For example, when a person goes to their physician and tells them something very private, the physician is not allowed to take this information and sell it for money to a third party to use against the patient or manipulate them without consent.

Data acquisition and sales are among the most significant ways Big Tech companies generate profit. They also use that data to curate content and sell products to consumers. Harari said rules similar to those in the medical field should apply to the tech field.

"We cannot trust the people that develop the technology to supervise themselves. They don't represent anybody," Harari said. "We never voted for them. They do not represent us. What gives them the power to make maybe the most important decisions in human history?"