A Google artificial intelligence system gave patients more accurate diagnoses and provided better bedside manner than traditional doctors, a recent study by the tech giant found.

Actors portraying patients, who did not know whether they were texting with real doctors or with Google's Articulate Medical Intelligence Explorer (AMIE), generally preferred how the AI handled their medical conditions, according to the study, which was posted Jan. 11 on the preprint server arXiv. A panel of physicians also judged AMIE to be more accurate than the human doctors at diagnosing the patients.

"To our knowledge, this is the first time that a conversational AI system has ever been designed optimally for diagnostic dialogue and taking the clinical history," Alan Karthikesalingam, a clinical research scientist at Google Health in London and a co-author of the study, told the scientific journal Nature on Friday.

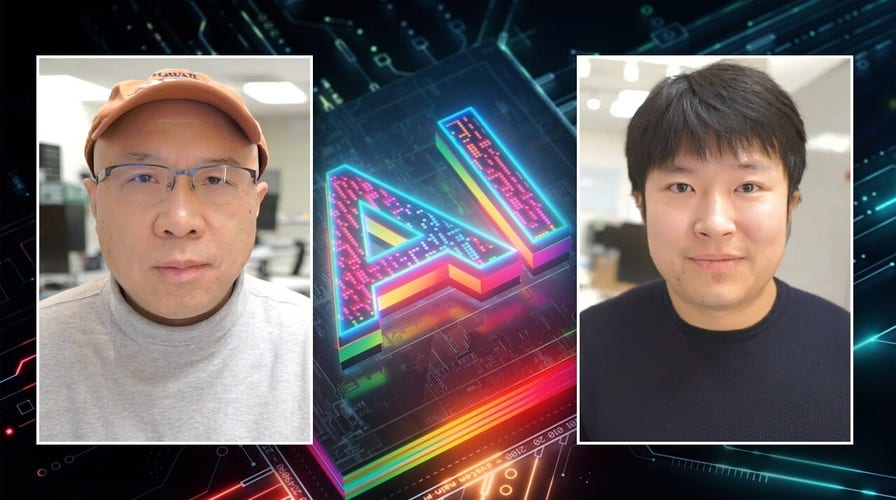

Google's AI system, the Articulate Medical Intelligence Explorer, outperformed primary care physicians on multiple evaluation criteria for diagnostic dialogue, including empathy. (Fox News)

In the study, which has not been peer-reviewed, AMIE went head-to-head against 20 board-certified clinicians in diagnosing 20 actors portraying patients, communicating exclusively through text. The actors were not told whether they were chatting with a human or an AI bot. Afterward, the patient actors rated their experiences across 26 metrics.

Across 149 simulated consultations, the actors preferred the AI system over the human doctors on 24 of those 26 metrics, including empathy.

At the same time, a panel of specialist physicians evaluated AMIE across 32 metrics. AMIE, which is built on a Google large language model, scored higher than the human doctors on 28 of them.

"Medicine is just so much more than collecting information — it’s all about human relationships," Adam Rodman, an internal medicine physician at Harvard Medical School, told Nature. He noted that AMIE could be a useful tool but that interactions with doctors were still essential.

Google developed an artificial intelligence system designed to engage with and diagnose patients much as human doctors do. (Storyblocks/Getty Images)

Karthikesalingam noted that restricting physicians to text-based consultations may have hurt their performance, since they might not be accustomed to that kind of interface.

"The physician-patient conversation is a cornerstone of medicine, in which skilled and intentional communication drives diagnosis, management, empathy and trust," Karthikesalingam and fellow Google Health researcher Vivek Natarajan wrote in a blog post Friday. "AI systems capable of such diagnostic dialogues could increase availability, accessibility, quality and consistency of care by being useful conversational partners to clinicians and patients alike."

"But approximating clinicians’ considerable expertise is a significant challenge," they added.

Google researchers involved in a study about their new artificial intelligence system optimized for diagnostic dialogue said the chatbot could eventually make health care more accessible worldwide. (Getty Images)

The pair wrote that AMIE is "early experimental-only work, not a product, and has several limitations." Additional rigorous studies are needed, they said.

"AMIE is our exploration of the 'art of the possible,' a research-only system for safely exploring a vision of the future where AI systems might be better aligned with attributes of the skilled clinicians entrusted with our care," Karthikesalingam and Natarajan wrote.

Google did not respond to a request for comment.