AI-powered tech combatting rising sextortion scams

Yaron Litwin, an executive at AI-powered company Canopy, explains how "AI can be used for good" in an ongoing chess match with criminals who use deepfakes in sextortion scams.

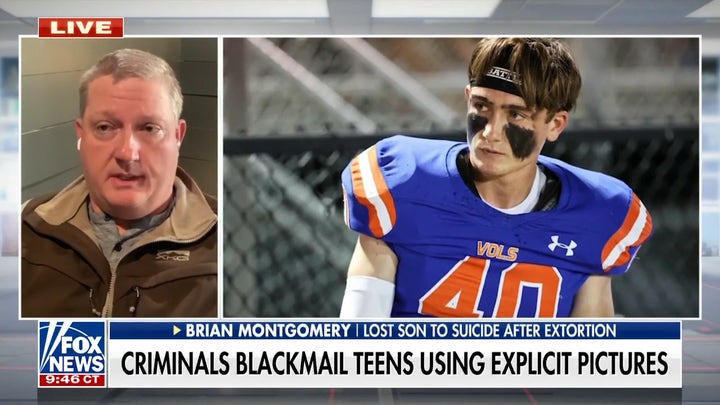

This story discusses suicide. If you or someone you know is having thoughts of suicide, please contact the Suicide & Crisis Lifeline at 988 or 1-800-273-TALK (8255).

A company's reliance on artificial intelligence to prevent sextortion scams sets up an AI vs. AI clash between criminals and "good guys."

Sextortion cases increased 322% between February 2022 and February 2023, according to the FBI, which recently said there's been an additional significant uptick since April.

Innocent beach pictures or men's bare-chested gym pictures can be twisted into sexually explicit, AI-generated "deepfakes" that are weaponized against panicked and embarrassed teens and preteens.

Cases have led to an alarming number of suicides among victims, experts said, but AI-powered tech can detect anything it considers "sexually explicit" and block it from ever seeing the light of day.

Litwin's company developed AI software that blocks these types of images (even innocent bathing suit pictures from the beach) from ever being sent out, and then alerts parents.

"Our technology is an AI platform that has been developed over 14 years … and can recognize images with video and an image in split seconds," Litwin told Fox News Digital.

"The platform itself will also filter out in real time any explicit image as you're browsing through a website or an app and really prevent a lot of pornography from appearing online."

It's essentially an extra layer of protection that stops naive children and teens from sharing even innocent pictures or videos that criminals can use against them.

"We like to call it AI for good," Litwin said. "It's a way to protect our kids."

He said the company will also work with the FBI to filter sexual abuse material, giving investigators tools that protect agents' mental health by sparing them from having to look at one disturbing image or video after another.

"There's no need to do this manually," Litwin said. "In split seconds, the AI can help identify if there is improper material that they're dealing with."

This is a simplified example of the ongoing chess match that AI developers and users will face as the technology advances and becomes more widespread.

Litwin said his company is exploring how to differentiate between "real" images and AI-generated fakes.

What is sextortion?

The FBI describes sextortion as a crime that "involves coercing victims into providing sexually explicit photos or videos of themselves, then threatening to share them publicly or with the victim's family and friends."

"Malicious actors use content manipulation technologies and services to exploit photos and videos – typically captured from an individual's social media account, open internet or requested from the victim – into sexually-themed images that appear true-to-life in likeness to a victim, then circulate them on social media, public forums or pornographic websites," the FBI said in a June 5 PSA.

"Many victims, which have included minors, are unaware their images were copied, manipulated and circulated until it was brought to their attention by someone else." At least a dozen sextortion-related suicides have been reported across the country, according to the latest FBI numbers from this year.

Many victims are males between the ages of 10 and 17, although there have been victims as young as 7, the FBI said. Girls have also been targeted, but the statistics show a higher number of boys have been victimized.

Last July, 17-year-old Gavin Guffey received a message from someone posing as a girl on Instagram one evening, and the pair began chatting on the social media app owned by Facebook's parent company, Meta.

That person convinced Gavin to turn on "vanish mode" in their Instagram chat, which allows messages to disappear after they are received. They then shared photos, his father, South Carolina Republican state Rep. Brandon Guffey, told Fox News Digital in a previous interview.

That led to demands for money and escalated until Gavin ended his life.

Sextortion isn't new, but the number of cases has boomed since the pandemic. From 2021 to 2022, the FBI recorded a 463% increase in reported sextortion cases, and now open-source AI tools have simplified the process for predators, the FBI said.

And, as the bureau notes, reported numbers don't tell the whole story because many victims feel shame and do not file reports, so the number of cases could actually be much higher.

Find help navigating ‘scary situation’

Michelle DeLaune, president and CEO of the National Center for Missing & Exploited Children, said in a previous statement that young victims of this crime "feel like there's no way out."

"But we want them to know that they’re not alone. In the past year, the National Center for Missing & Exploited Children has received more than 10,000 sextortion-related reports," DeLaune said this year. "Please talk to your children about what to do if they (or their friends) are targeted online. NCMEC has free resources to help them navigate an overwhelming and scary situation."

NCMEC also provides a free service called "Take It Down," which helps victims remove or stop the online sharing of sexually explicit images or videos. The website is https://takeitdown.ncmec.org/.

The FBI provides recommendations for sharing content online as well as resources for extortion victims at https://www.ic3.gov/Media/Y2023/PSA230605/.

The FBI also urges victims to report exploitation by calling their local FBI field office, calling 1-800-CALL-FBI (1-800-225-5324) or reporting it online at tips.fbi.gov/.